Founded in Sydney in 1996, Appen is a technology company that collects and improves data for the purposes of training and developing machine learning and artificial intelligence systems. During my time at Appen, a significant part of my work involved a diverse range of projects for both automatic speech recognition (ASR) and text-to-speech (TTS) technologies, including phonetic transcription, grapheme-to-phoneme or letter-to-sound (L2S) rule development, and part-of-speech (POS) annotation.

To break down these language technologies further, ASR enables computers to convert human spoken language into readable text, allowing users to operate devices through speech or facilitating translation of that speech into other languages. Conversely, TTS generates an audio version of a written text. TTS can be used to improve accessibility, particularly for those with vision impairments or learning disabilities such as dyslexia.

Language technology relies heavily on good-quality data. The table below illustrates some of the processes used in Appen projects, with an example use case for each.

| Process Type | Description | Example Use Case |
|---|---|---|
| Phonetic Transcription | The process of transcribing the words in a lexicon according to their sound, producing a pronunciation lexicon, through the use of a phonetic alphabet such as the International Phonetic Alphabet (IPA) or X-SAMPA (a phonetic script that uses symbols all found on a standard keyboard, aiding in native-speaker transcription). Suprasegmental features such as stress, tone, vowel length, pitch accent and syllabification can also be encoded where relevant to the language. | Use in ASR: Appen developed language packs in 26 languages for the Intelligence Advanced Research Projects Activity (IARPA) Babel program, facilitating improvement in speech recognition performance for languages other than English which have very little transcribed data. These projects generally have data collection, orthographic transcription and phonetic transcription components, the last of which uses X-SAMPA annotation. Further reading: IARPA - Babel. Example dataset: IARPA Babel Mongolian Language Pack. Use in TTS: In collaboration with health service providers GuildLink and MedAdvisor, Appen produces TTS audio of Australian Consumer Medicine Information (CMI) documents. This audio is available at medsinfo. Further reading: GuildLink Makes Consumer Medicines Information More Accessible. |
| Grapheme-to-Phoneme or Letter-to-Sound (L2S) Rule Development | A set of rules for a given language that map between orthography and pronunciation, used to generate phonetic transcriptions from an input text. These rules can be both single and multi-letter mappings such as orthographic <s> → X-SAMPA /s/ and <sh> → /S/ in English. The rules can refer to the surrounding environment, which allows for alternative phonetic mappings based on the characters’ position in a word, surrounding morphemes, or other linguistic processes that impact pronunciation. For example, rules are implemented to specify when <s> should be pronounced as /z/ as in ‘roses’ or as /s/ as in ‘sit’. Suprasegmental features such as stress, tone, vowel length, pitch accent and syllabification may also be encoded. However, the accuracy of these in the output pronunciations depends on the given language and the extent to which these features are predictable from orthography alone. | As was the case for the IARPA Babel program, one of the first steps in producing pronunciation lexicons involves running the wordlist through a language- or dialect-specific L2S algorithm to generate hypothesised X-SAMPA pronunciations of each word. For many of the 26 languages in Babel, these rules didn’t yet exist and had to be developed through research, consultation and iterative testing with native speaker linguists. The automated output is then checked and edited as needed by native speaker linguists. L2S rules may be further developed according to customised requirements, for example, additional pronunciation variants for dialectal, colloquial or fast speech on top of the canonical pronunciation. |
| Part-of-Speech (POS) Annotation | The process of annotating each word in a text with its grammatical part of speech (for example, noun or verb), depending both on the word’s definition and its context within the wider phrase or text. A defined set of labels is used according to the grammar of the language being annotated. POS lexicons may be used in conjunction with pronunciation lexicons to further improve natural language processing models for both ASR and TTS. They also assist in named-entity recognition (NER) for large corpora in cases where proper nouns are given additional sub-tags such as person, organisation and location. | In collaboration with Larrakia Nation, Appen assisted in linking two databases (text and audio) that previously could only be accessed independently, and had minimal time alignment between the audio and the Larrakia and English text. One of the components of this project involved reviewing the POS annotations in the database, through a series of automated and manual checks, to identify and correct genuine errors or inconsistencies in notation from the original field notes, so that these were not present in the final version. Further reading: Preserving Language Through Useable Data and Phonetic Annotation. |
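
The context-sensitive L2S rules described in the table can be illustrated with a minimal Python sketch. This is a toy under stated assumptions, not Appen's L2S implementation: the rule format, the `l2s` function and the handful of letter mappings are hypothetical, stress marking is omitted, and the voicing rule is deliberately oversimplified (it would wrongly voice the final consonant of 'bus').

```python
import re

VOWELS = "aeiou"

# Hypothetical rule format: (grapheme, left-context regex, right-context regex, X-SAMPA).
# Rules are tried in order and the first match wins, so multi-letter and
# context-specific rules come before the general fallback for the same letter.
RULES = [
    ("sh", "", "", "S"),                                   # <sh> -> /S/
    ("s", rf"[{VOWELS}]$", rf"^([{VOWELS}]|$)", "z"),      # <s> after a vowel, before a vowel or word end
    ("s", "", "", "s"),                                    # default <s> -> /s/
    ("o", "", "", "@U"),                                   # toy vowel mappings, enough for the examples
    ("e", "", "", "I"),
    ("i", "", "", "I"),
    ("r", "", "", "r"),
    ("t", "", "", "t"),
    ("p", "", "", "p"),
]


def l2s(word):
    """Return a space-separated hypothesised pronunciation for a word."""
    word = word.lower()
    phones = []
    i = 0
    while i < len(word):
        for graph, left, right, phone in RULES:
            if not word.startswith(graph, i):
                continue
            before, after = word[:i], word[i + len(graph):]
            # An empty context pattern matches anywhere, so it acts as "no restriction".
            if re.search(left, before) and re.search(right, after):
                phones.append(phone)
                i += len(graph)
                break
        else:
            raise ValueError(f"no rule covers {word[i]!r} in {word!r}")
    return " ".join(phones)


hypotheses = {w: l2s(w) for w in ["sit", "ship", "roses"]}
```

As in the workflow described in the table, output like this would only be a hypothesis to be checked and edited by native speaker linguists.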

In all projects, data management is an important factor, both throughout the life of the project and following its completion. The latter is particularly important in cases where Appen retains the license to resell the datasets.

In this case study, I’ll look at both of these aspects, outlining some of the challenges of data management and how these are overcome at Appen.

Data Management during the Life of a Project

Due to the variety of services, data types and customisation capabilities provided by Appen, it is difficult to present a single standard approach to data management. Instead, I’ll focus on the processes that were developed for datasets that have both orthographic and phonetic transcription components, also known as transcription and lexicon multi-component projects. The basic procedure for these projects is outlined below:

The client or project sponsor specifies the parameters for the dataset and its collection in consultation with Appen specialists.

Appen carries out data collection, usually in the form of conversational or scripted telephony, VoIP or microphone recordings with qualified participants from Appen’s crowd of more than a million. Conversational data refers to spontaneous or unscripted natural speech on a variety of topics either over the telephone or with both participants in the same room, while for scripted data, participants read and respond to a set text of prompts, curated to facilitate topic, domain, keywords, key phrases and phonetic coverage. All participants are provided with a consent form explaining the purpose of the collection and how the data will be used, and only take part in the collection if they are happy and comfortable to do so, and if they sign the consent form. Personal data such as names are anonymised and sensitive personal data is not collected.

The audio data is then quality-checked to ensure it meets the requirements for the collection, including demographic balance, language and content, background noise levels, audio levels and recording duration.

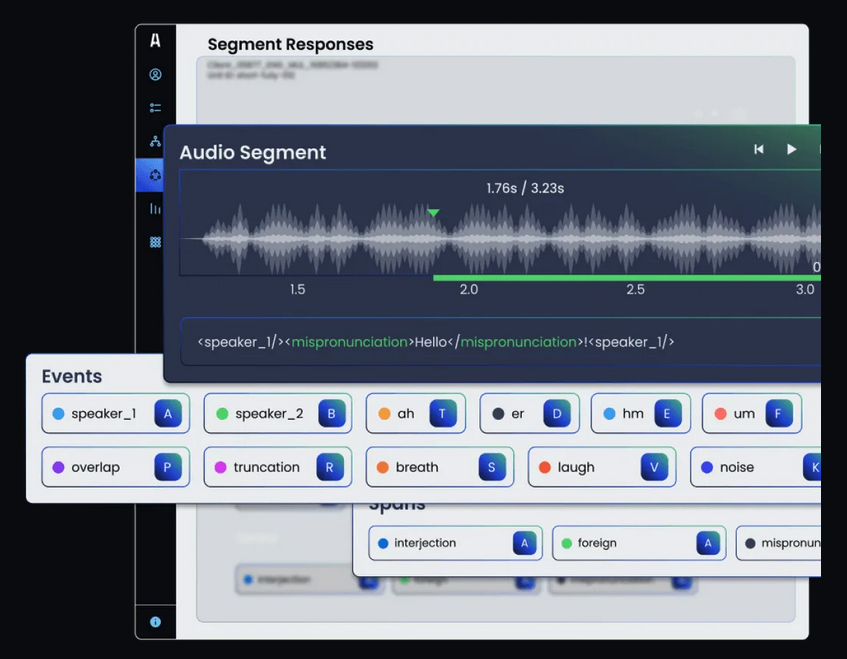

The approved audio goes through some pre-processing steps to batch the data into smaller segments, then is orthographically transcribed by the transcription (TX) team. Timestamps are checked at this stage as well to ensure correct alignment of the audio and the transcribed text, and a variety of labels are added to capture speaker and non-speaker noise events (e.g. laugh, cough, background noise). Rigorous quality assurance processes are applied while the transcription is ongoing and on the resulting complete data set.

Image Source: Appen

Image Source: Appen

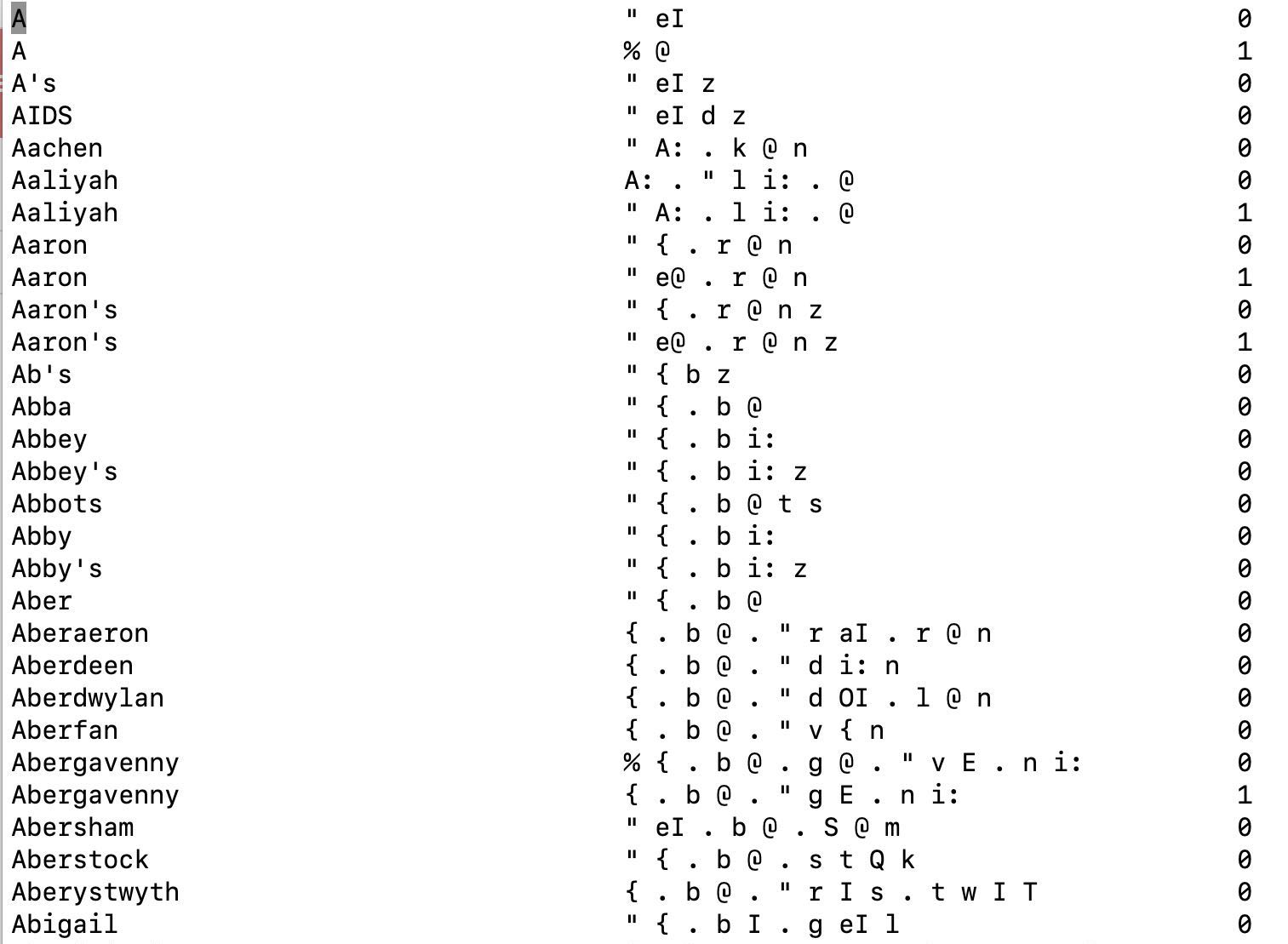

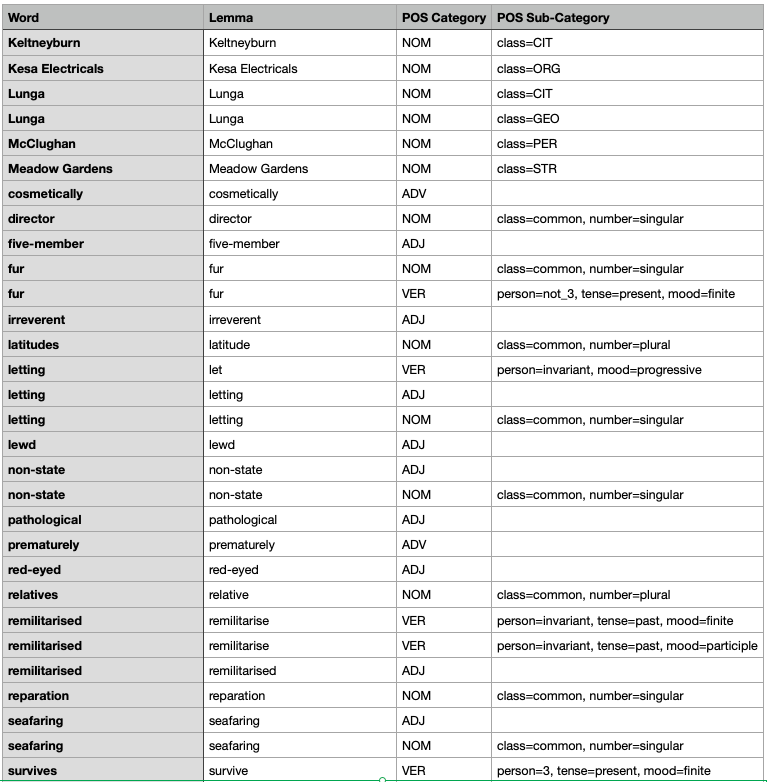

- A sorted list of unique word forms (e.g. ‘house’, ‘houses’) occurring in the dataset is created from the orthographically transcribed data and sent to the lexicon (LX) team, who work with a group of native speaker linguists to prepare phonetic transcriptions of the words, either in X-SAMPA or another phonetic script. If a POS lexicon is also required for the dataset, this is similarly created from the sorted list of unique word forms and checked by native speaker linguists.

Image Source: Appen

Image Source: Appen

Image Source: Appen

Data across each stage is validated for quality assurance.

The audio, transcription and lexicon(s) are packaged for delivery.

As shown above, the data for a single project goes through multiple stages of annotation and requires specialist linguist expertise in the data management process before each part can be combined for final delivery.
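
The wordlist step in this procedure can be sketched in a few lines of Python. This is an illustrative sketch only: the angle-bracket format for noise event labels, the `build_wordlist` name and the decision to keep case as transcribed are assumptions, not details of Appen's tooling.

```python
import re

# Assumption: noise events are written as angle-bracket labels, e.g. <laugh>.
EVENT_LABEL = re.compile(r"<[^>]+>")
# A word form is a run of letters, optionally joined by apostrophes (e.g. don't).
WORD = re.compile(r"[^\W\d_]+(?:['’][^\W\d_]+)*")


def build_wordlist(transcript_lines):
    """Return the sorted unique word forms found in the transcription."""
    forms = set()
    for line in transcript_lines:
        text = EVENT_LABEL.sub(" ", line)  # drop event labels before tokenising
        forms.update(match.group(0) for match in WORD.finditer(text))
    return sorted(forms)


lines = [
    "the house is red <laugh>",
    "<cough> both houses are red",
]
wordlist = build_wordlist(lines)
# wordlist == ['are', 'both', 'house', 'houses', 'is', 'red', 'the']
```

Case is left untouched here because capitalisation is one of the features that may itself need standardising; whether to fold case would be a per-project decision.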

One major challenge in this process is dealing with errors and inconsistencies that arise both before and during the validation stage, particularly where data is shared between the TX and LX teams. As transcribers and linguists prepare the annotations, variations may arise in features like spelling, capitalisation and punctuation. Some languages have no accepted orthographic convention, or several competing ones, so a standard approach must be determined for the project and adhered to. Other languages already have standard orthographic conventions, but spelling or other errors may be introduced at one stage of transcription and only identified at another.

Once a variation is identified and a decision on approach has been made, the change is applied to the whole of the transcription before an updated wordlist is prepared for the lexicon. This spelling standardisation phase is inherently iterative. To reduce the number of iterations, robust processes were developed to automatically identify many of the variations and then verify that the agreed final spelling forms had been applied.
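
A minimal Python sketch of applying an agreed set of spelling decisions and then checking that none of the variants remain is given below. The function names, the dictionary format for the spelling decisions and the sample lines are all hypothetical; the source does not describe how Appen's own processes are implemented.

```python
import re
from collections import Counter


def apply_spelling_map(lines, spelling_map):
    """Replace whole-word variants with their agreed forms.

    Returns the updated lines and a tally of how often each variant was replaced.
    """
    if not spelling_map:
        return list(lines), Counter()
    # Longest variants first, so 'colourr' is tried before 'color'.
    variants = sorted(spelling_map, key=len, reverse=True)
    pattern = re.compile(r"\b(" + "|".join(map(re.escape, variants)) + r")\b")
    applied = Counter()

    def swap(match):
        applied[match.group(0)] += 1
        return spelling_map[match.group(0)]

    return [pattern.sub(swap, line) for line in lines], applied


def remaining_variants(lines, spelling_map):
    """List variants still present; this should be empty after a clean pass."""
    text = "\n".join(lines)
    return sorted(
        v for v in spelling_map if re.search(r"\b" + re.escape(v) + r"\b", text)
    )


spelling_map = {"color": "colour", "colourr": "colour"}  # variant -> agreed form
lines = ["the color red", "a colourr chart", "my favourite colour"]
updated, applied = apply_spelling_map(lines, spelling_map)
leftover = remaining_variants(updated, spelling_map)
```

The tally gives a simple record of which decisions were applied, and an empty `leftover` list is the signal that a new wordlist can be generated for the lexicon.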

One example of these automated spelling standardisation processes is a Python script run by the LX team which identifies entries with identical pronunciations but different orthographies in a lexicon. In some cases, these matches are expected (e.g. ‘red’ and ‘read’ in English would both have an X-SAMPA transcription /" r E d/), while in other cases, it identifies either errors in spelling (e.g. ‘colourr’) or variation in need of standardisation (e.g. ‘colour’/‘color’). The output of potential errors is reviewed by a native speaker and once legitimate inconsistencies are identified, these are sent back to the TX team so that they can be updated in the transcription data and a new wordlist generated for the lexicon. The process for making these updates is automated as much as possible but linguistic expertise is required at every stage to ensure the corrections are appropriately applied. This close collaboration of the teams responsible for the transcription and lexicon components is integral to producing high-quality speech databases with optimised data management practices.
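
The check described above can be sketched as follows. This is not the LX team's script: the `find_shared_pronunciations` name, the representation of the lexicon as (orthography, pronunciation) pairs and the sample pronunciations are assumptions made for illustration.

```python
from collections import defaultdict


def find_shared_pronunciations(lexicon):
    """Group orthographic forms that share an identical pronunciation.

    `lexicon` is an iterable of (orthography, pronunciation) pairs.
    Only pronunciations with more than one distinct spelling are returned.
    """
    by_pron = defaultdict(set)
    for word, pron in lexicon:
        by_pron[pron].add(word)
    return {
        pron: sorted(words) for pron, words in by_pron.items() if len(words) > 1
    }


# Illustrative entries with X-SAMPA pronunciations.
lexicon = [
    ("red", '" r E d'),
    ("read", '" r E d'),
    ("colour", '" k V l @'),
    ("color", '" k V l @'),
    ("colourr", '" k V l @'),
    ("sit", '" s I t'),
]
candidates = find_shared_pronunciations(lexicon)
for pron, words in candidates.items():
    print(f"/{pron}/: {', '.join(words)}")
```

The script only proposes candidates. Deciding that 'read'/'red' is an expected match while 'colourr' is an error remains a judgement for the native speaker reviewer.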

Data Management for Pre-Labeled Datasets

Image Source: Appen

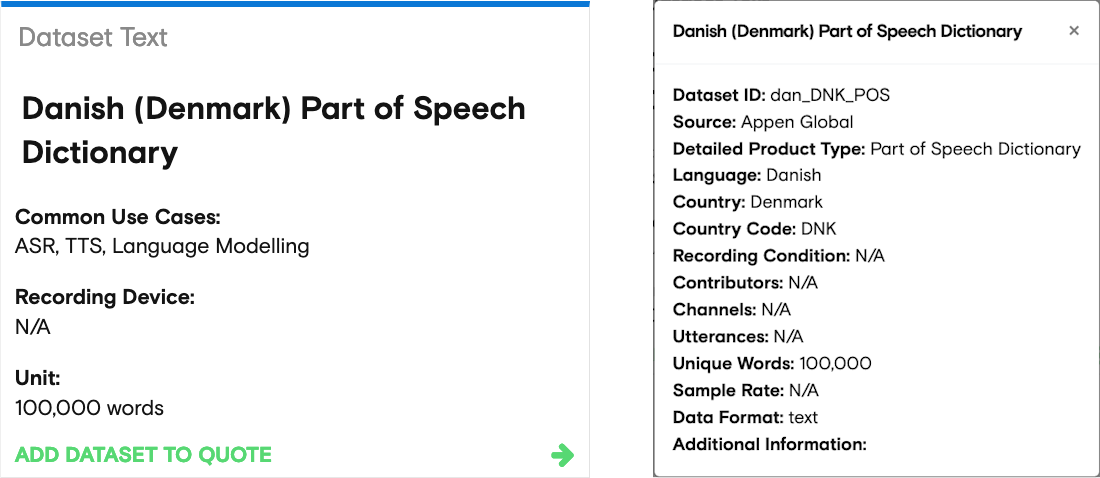

At the time of writing, Appen has over 280 audio, image, video and text datasets in over 80 languages available as pre-labeled datasets. These datasets are publicly accessible and can be filtered according to several categories: product type, common use cases, language and, where applicable, volume (number of hours of audio, word count or image count; called Unit in the table below). Combined with a standard search function, this filtering allows interested parties to narrow their query to the data most applicable to their needs.

| Product Type | Common Use Cases | Language | Unit |
|---|---|---|---|
| | | A-Z | A measure of the volume of the dataset, either in hours or word count. |

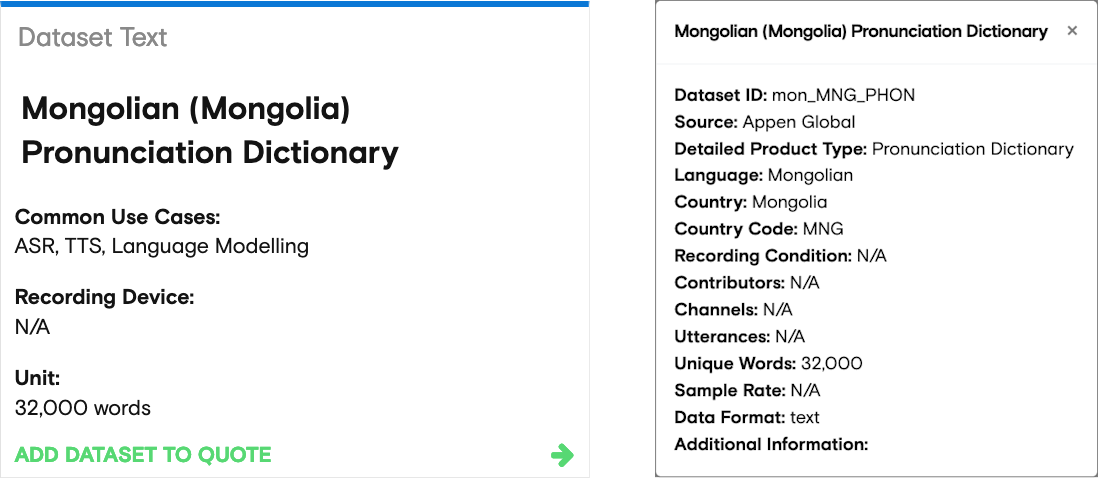

Each listing contains further details about the dataset, particularly in relation to aspects of the data collection, including the specifics of the language collected, the volume of the dataset, the number of contributors involved, recording conditions and the file formats available. Additional details appear in a pop-up window when you click on the “tile” for a given dataset. For example:

Catalogue entries for a Danish POS dataset and a Mongolian pronunciation dictionary:

Image Source: Appen

This is a helpful starting point for browsing and refining the dataset selection, but in order to find the most suitable match, clients consult directly with Appen to discuss their language technology needs in full.

More detailed metadata for each dataset is tracked internally and this is used to further assist with client queries and requests. Some of the additional metadata categories recorded include the demographics of the contributors for each dataset, such as the age range and gender distribution of the participants, the dialect coverage within a specific language, the year of collection, and the domains or topics discussed in the collection.

Using the metadata as filters, each request leads to one of three outcomes:

- Dataset(s) matching all requirements are available, and a sample of the data is shared with the client to confirm this is the case.

- Dataset(s) matching only some of the requirements are available, and samples of these are shared in case they are still applicable to the project.

- No dataset matching the requirements is available, and a quote is then prepared for producing a new dataset or enhancing an existing dataset.
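
The three outcomes can be expressed as a simple triage over catalogue metadata. The sketch below is hypothetical throughout: the `Dataset` class, the `triage` function, the metadata keys and the exact-match comparison are assumptions for illustration, not a description of Appen's internal systems.

```python
from dataclasses import dataclass


@dataclass
class Dataset:
    name: str
    metadata: dict  # hypothetical keys, e.g. language, product_type


def triage(requirements, catalogue):
    """Classify a request as 'full match', 'partial match' or 'no match'."""
    full, partial = [], []
    for dataset in catalogue:
        met = [
            key for key, wanted in requirements.items()
            if dataset.metadata.get(key) == wanted
        ]
        if len(met) == len(requirements):
            full.append(dataset.name)
        elif met:
            partial.append(dataset.name)
    if full:
        return "full match", full        # share a sample to confirm
    if partial:
        return "partial match", partial  # share samples in case they still apply
    return "no match", []                # prepare a quote for new or enhanced data


catalogue = [
    Dataset("Danish POS dataset", {"language": "Danish", "product_type": "POS lexicon"}),
    Dataset("Mongolian pronunciation dictionary",
            {"language": "Mongolian", "product_type": "pronunciation dictionary"}),
]
outcome, names = triage({"language": "Danish", "product_type": "POS lexicon"}, catalogue)
```

In practice the comparison would need to handle ranges and partial overlaps (for example, age range or dialect coverage), which is part of why requests are discussed with the client directly.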

In terms of dataset storage, collections are catalogued first according to language and second by dataset type. This method works particularly well for pre-labeled datasets, because, if nothing else, clients usually know which language(s) they want data for and other specifics can follow from there.

Conclusions

Data management is a crucial consideration at Appen and, more broadly, in any language technology project, both when new datasets are being created and when finalised collections are being prepared for cataloguing. Two main issues to consider for data collections are:

- Ensuring that collections with multiple component parts are organised in such a way that updates can be applied to the whole, rather than introducing inconsistencies between related data.

- Determining the parties that will be using the repository and ensuring that the methods of accessing data are intuitive for all groups.

These issues are handled in the Appen workflow through the use of iterative semi-automated spelling checks and other similar processes which enable modifications to be integrated into all levels of the dataset, and through clear recording and accessibility of the metadata describing a collection, available both to Appen staff and its clients.

For LDaCA, these issues are of equal importance for wider data management. On the first point, while it is ultimately the responsibility and prerogative of the data contributor to decide how their data should be organised, LDaCA can also assist with this process. Guidance is available on best practices for data management and organisation of metadata, both in the documentation available at LDaCA Resources and through automated validation of metadata category requirements for datasets added and edited through Crate-O. On the second point, LDaCA has a responsibility to ensure that tools to facilitate data management and discoverability are accessible and intuitive for all, including the portals that use the Oni application to access data packaged as RO-Crates. This should be an iterative process, with further improvements to operability implemented based on user feedback and needs.